How artificial intelligence is assisting the digital twin to benefit Houston’s water utilities and citizens.

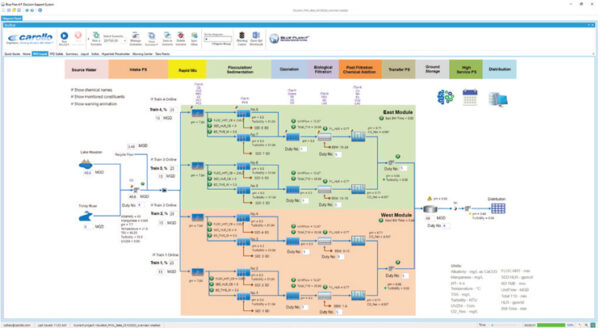

The city of Houston and regional water authorities have embarked on a multi-year project to build a major expansion of the Northeast Water Purification Plant (NEWPP), increasing its capacity from 80 MGD to 400 MGD. Using Carollo’s Blue Plan-it® Decision Support System, a digital-twin operations model was developed for the NEWPP so that engineers, managers, and operators can experiment virtually with their facility to support operational decisions (Figure 1).

Calibrated using full-scale, pilot-scale, and bench-scale testing data, our digital twin can track flow and mass balance, estimate solids production and chemical usage, simulate truck traffic associated with chemical and solids hauling, and assess power consumption. With several mechanistic water treatment analytics integrated, it can assess concentration-time (CT) and predict disinfection byproduct (DBP) formation for the plant’s multi-disinfectant systems, including ozone, chlorine dioxide, chlorine, and chloramine. It can also simulate the impacts of chemical additions on water quality, tracking 15 corrosion and stability indices using standard algorithms similar to those used by the RTW model, the Water Pro model, the EPA WTP Model, and others.
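To make the CT term concrete, the following is a minimal sketch of the conventional calculation, in which CT is the disinfectant residual multiplied by an effective contact time (T10), and T10 is approximated as a baffling factor times the theoretical detention time. The function name, parameters, and numbers are illustrative assumptions, not NEWPP values, and the article does not describe how the digital twin itself performs this calculation.

```python
def ct_value(residual_mg_per_l, basin_volume_gal, flow_gpm, baffling_factor):
    """Return CT in mg*min/L for a single disinfection segment.

    Assumes the common approximation T10 = baffling factor x (volume / flow).
    """
    if flow_gpm <= 0:
        raise ValueError("flow must be positive")
    theoretical_detention_min = basin_volume_gal / flow_gpm
    t10_min = baffling_factor * theoretical_detention_min
    return residual_mg_per_l * t10_min


# Illustrative inputs only (hypothetical basin, not plant data).
ct = ct_value(
    residual_mg_per_l=1.2,
    basin_volume_gal=500_000,
    flow_gpm=10_000,
    baffling_factor=0.5,
)
print(round(ct, 1))
```

In a multi-disinfectant plant, a calculation of this kind would typically be repeated per segment and per disinfectant, then compared against the required CT for the target inactivation.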

Figure 1. Integrated with the latest machine learning and artificial intelligence analytics, Carollo’s Blue Plan-it WTP Operations Simulator helps water treatment plant managers and operators increase productivity and reduce operational costs.

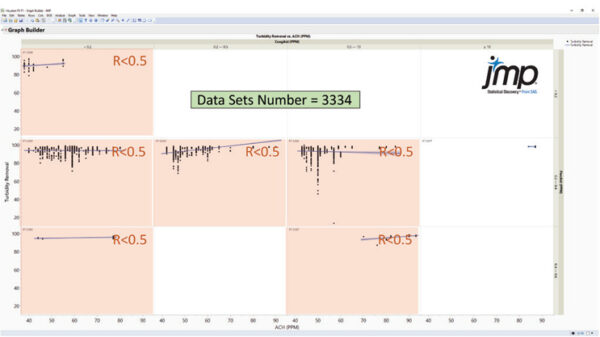

However, one challenging area of water treatment modeling is estimating settled water turbidity and total organic carbon (TOC) when a combination of coagulation/flocculation chemicals (aluminum chlorohydrate [ACH], coagulant aid, floc aid, etc.) is added. No accurate mechanistic model is available to simulate the performance of the flocculation and sedimentation process, and empirical models are often limited in their capabilities and accuracy, as shown in Figure 2.

In the past, the plant staff relied on daily jar testing to determine the removal rate of turbidity and TOC under a given chemical dosing scheme. In recent years, zeta potential measurements were introduced, which appeared to correlate better with chemical dosages, but they are still insufficient to support the development and calibration of a reliable and universally applicable model. A flexible operation support module is desired that lets the user: 1) determine the settled water quality based on raw turbidity and TOC along with coagulant and polymer doses; 2) determine the chemical doses based on raw turbidity and TOC as well as the target settled water quality; and 3) determine the coagulant and polymer doses based on raw water quality and a zeta potential target.

Figure 2. A multizone correlation analysis of the historical data demonstrated that the chemical dosages have poor correlation with settled water turbidity and TOC removal, with a coefficient of determination (R²) of less than 0.5.

In recent years, advanced data analytics and machine learning technologies have gained popularity in the water industry. Using common computational libraries, users can leverage machine learning to identify patterns in data and generate statistical models without explicitly programmed rules. Fully integrated into the Blue Plan-it Digital Twin models, several machine learning methods, such as a random forest regressor or a K-neighbors regressor, can now be easily applied to supplement our conventional water analytics.
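As an illustration of how a K-neighbors regressor learns from data without explicit rules, here is a minimal pure-Python sketch: it predicts a target as the average of the targets of the k most similar historical records. The function name and the records are made up for illustration, and the article does not state which library or settings Blue Plan-it uses; libraries such as scikit-learn provide production-grade equivalents.

```python
import math


def knn_predict(train_X, train_y, query, k=3):
    """Predict a target as the mean of the k nearest training targets."""
    distances = sorted(
        (math.dist(row, query), target)
        for row, target in zip(train_X, train_y)
    )
    nearest = distances[:k]
    return sum(target for _, target in nearest) / len(nearest)


# Hypothetical records (not plant data):
# (raw turbidity in NTU, coagulant dose in mg/L) -> settled turbidity in NTU
train_X = [(10.0, 5.0), (10.0, 10.0), (20.0, 5.0),
           (20.0, 10.0), (30.0, 10.0), (30.0, 15.0)]
train_y = [2.0, 1.0, 4.0, 2.0, 3.0, 1.5]

# The two closest records to the query are (20, 10) and (20, 5).
estimate = knn_predict(train_X, train_y, query=(21.0, 9.0), k=2)
print(estimate)
```

In practice the input features would usually be scaled to comparable ranges before computing distances, since otherwise the feature with the largest numeric range dominates the neighbor search.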

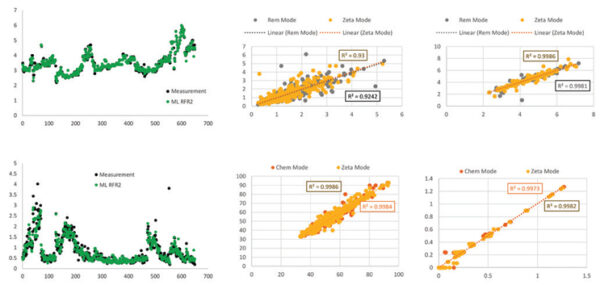

Can We Trust It? The Accuracy Of The Machine Learning Model

Four years of full-scale and jar test data for NEWPP were used for machine learning, with 80% of the data (2,666 data points) used to train the model and 20% of the data (644 data points) used to test the model accuracy. The data included, but were not limited to, raw water TOC, turbidity, and zeta potential; settled water TOC, turbidity, and zeta potential; ACH dosage; flocculation aid dosage; coagulant aid dosage; time; and temperature. The machine learning algorithm can be used in three simulation modes: 1) chemical calculator mode to predict chemical dosages; 2) removal rate mode to predict the settled water TOC and turbidity; and 3) zeta potential mode to predict the amount of chemicals needed to achieve a target zeta potential. When evaluated against the 20% testing data, the machine learning model accurately predicted settled water quality and chemical dosages, with R² values ranging from 0.93 to 0.99, significantly better than conventionally fitted empirical models (Figure 3).
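The evaluation procedure described above (train on 80% of the records, score on the held-out 20% using the coefficient of determination) can be sketched as follows. This is a minimal illustration under stated assumptions: the data are synthetic, the helper names are hypothetical, and a simple least-squares line stands in for the model so that the block stays self-contained. It is not the NEWPP machine learning model.

```python
import random


def split_train_test(records, test_fraction=0.2, seed=0):
    """Shuffle records reproducibly and split them into (train, test)."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def r_squared(measured, predicted):
    """Coefficient of determination: 1 - SS_residual / SS_total."""
    mean_measured = sum(measured) / len(measured)
    ss_res = sum((m - p) ** 2 for m, p in zip(measured, predicted))
    ss_tot = sum((m - mean_measured) ** 2 for m in measured)
    return 1.0 - ss_res / ss_tot


def fit_line(points):
    """Least-squares fit y = slope * x + intercept (stand-in model)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Synthetic records: (dose in mg/L, settled turbidity in NTU) with noise.
rng = random.Random(42)
records = [(i * 0.1, 5.0 - 0.3 * (i * 0.1) + rng.gauss(0, 0.2))
           for i in range(100)]

train, test = split_train_test(records, test_fraction=0.2, seed=1)
slope, intercept = fit_line(train)          # fit on training data only
predictions = [slope * x + intercept for x, _ in test]
score = r_squared([y for _, y in test], predictions)
print(len(train), len(test), round(score, 3))
```

Scoring only on records the model never saw during fitting is what makes the reported R² a measure of predictive accuracy rather than of how well the model memorized its training data.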

Figure 3. Machine learning model predicts settled water TOC and turbidity, ACH doses, and coagulant aid doses accurately. (Measured data in black; machine learning predictions in green.)

Once the accuracy of the model was successfully demonstrated, the machine learning module was integrated into the latest version of the NEWPP operations simulators. This innovation was well received by plant managers and operators. It is being actively used for training, troubleshooting, and O&M planning. The plant is collecting additional data day by day, which will be used to retrain the machine learning model. It is expected that the model’s accuracy will continue to improve over time.

What Else Can We Do With It? Other Applications Of Machine Learning In Water Treatment

Rather than being treated as a black box, machine learning has proven useful when applied correctly. It is a particularly good solution when no known mechanisms or correlations are available to predict results and when adjusting multiple factors (in the case above, dosages of multiple chemicals) affects multiple results (e.g., TOC, turbidity, and zeta potential). The next reasonable step to improve the NEWPP digital twin is to apply machine learning to model the granular media filtration process. This is expected to improve on the current empirical methods for estimating filtered water quality, filter backwash frequency, and unit filter run volume (UFRV), which in turn will allow more accurate simulation of disinfectant decay and DBP formation using a hybrid data-driven mechanistic model.

Machine learning applications in the water industry can extend well beyond what has been demonstrated above. They include modeling adsorption process performance to predict media replacement frequency for TOC or per- and polyfluoroalkyl substances (PFAS) removal; membrane process modeling to predict membrane backwash, maintenance wash, and clean-in-place (CIP) frequencies; and model predictive control of wastewater biological treatment.

Machine learning can also supplement distribution system water quality modeling. Utilities often have years of data on chlorine residuals, trihalomethanes (THMs), and haloacetic acids (HAAs) in the distribution system. Other data are also relevant, including water quality from each source in the system (bromide, UV254, pH, temperature, dissolved organic carbon [DOC], etc.), chlorine dosage at each injection point, and information required to estimate water age and source contribution throughout the distribution system.

A machine learning model that continuously receives and processes real-time data and is frequently retrained in an automated manner could be instrumental in coping with such complexity and with the dynamic nature of distribution system operation. Often the issue is not a lack of data, but the lack of an established approach for turning the data into knowledge. To address this challenge, Carollo is working with utilities to establish a data pipeline that accumulates data semi- or fully automatically from various sources (SCADA, laboratory information management systems [LIMS], data loggers, the United States Geological Survey [USGS] website, etc.) to feed the digital twin. A strong data processing module is also being integrated into Blue Plan-it to scrub, downsize, resample, and process the raw information into useful data that can feed the model.
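The scrub-and-resample step mentioned above can be illustrated with a small sketch: drop missing or implausible sensor readings, then average what remains into hourly values. The function name, validity limits, and readings are hypothetical, and this is only one simple way such a step might look; the article does not describe how the Blue Plan-it module is implemented.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def scrub_and_resample(readings, low, high):
    """Drop missing or out-of-range values, then average the rest per hour."""
    buckets = defaultdict(list)
    for timestamp, value in readings:
        if value is None or not (low <= value <= high):
            continue  # scrub: sensor dropout or implausible reading
        hour = timestamp.replace(minute=0, second=0, microsecond=0)
        buckets[hour].append(value)
    return {hour: mean(values) for hour, values in sorted(buckets.items())}


# Made-up raw turbidity readings in NTU (not plant data).
raw = [
    (datetime(2023, 1, 1, 8, 5), 12.0),
    (datetime(2023, 1, 1, 8, 35), 14.0),
    (datetime(2023, 1, 1, 8, 50), -1.0),   # invalid negative value
    (datetime(2023, 1, 1, 9, 10), None),   # sensor dropout
    (datetime(2023, 1, 1, 9, 40), 10.0),
]

hourly = scrub_and_resample(raw, low=0.0, high=1000.0)
for hour, value in hourly.items():
    print(hour.isoformat(), value)
```

Averaging into fixed intervals both downsizes high-frequency SCADA data and aligns sources that report at different rates, so that each model input row refers to the same time window.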

Source: WaterOnline